It’s Not a Chatbot And That Might Be This AI Tutor’s Superpower

Ryan Meuth believes there is tremendous potential for AI tutors. The professor in the School of Computing and Augmented Intelligence in the Fulton Schools of Engineering at Arizona State University is particularly interested in the potential of AI to help the university’s online students who take condensed seven-and-a-half-week courses.

“These are students who have jobs and families and lives,” Meuth says, noting that they are often doing school work late at night or early in the morning, when there might not be someone around to answer their questions. “They are the students who are in the most need of support at generally the worst times of day to get live support.”

Meuth believes the right AI tutor can help these students. “It can give them that immediate feedback and assistance, so they can keep moving forward in that compressed timeline,” he says.

To that end, Meuth and Arizona State University have partnered with Wiley, a publishing and education company, to create a computer science tutor that can help students who get stuck while working in Wiley’s zyBooks coding lab, which ASU uses for classes.

The AI tutor, like any good AI tutor, is designed to help students find the answer rather than provide the answer to them. But creating a tool that actually accomplishes this is easier said than done. So instead of launching this school-wide, Meuth and the team from Wiley are conducting several pilot studies and improving the tutor as they go to ensure its long-term efficacy.

Here’s a look at what they’ve learned so far.

An AI Tutor But Not A Chatbot

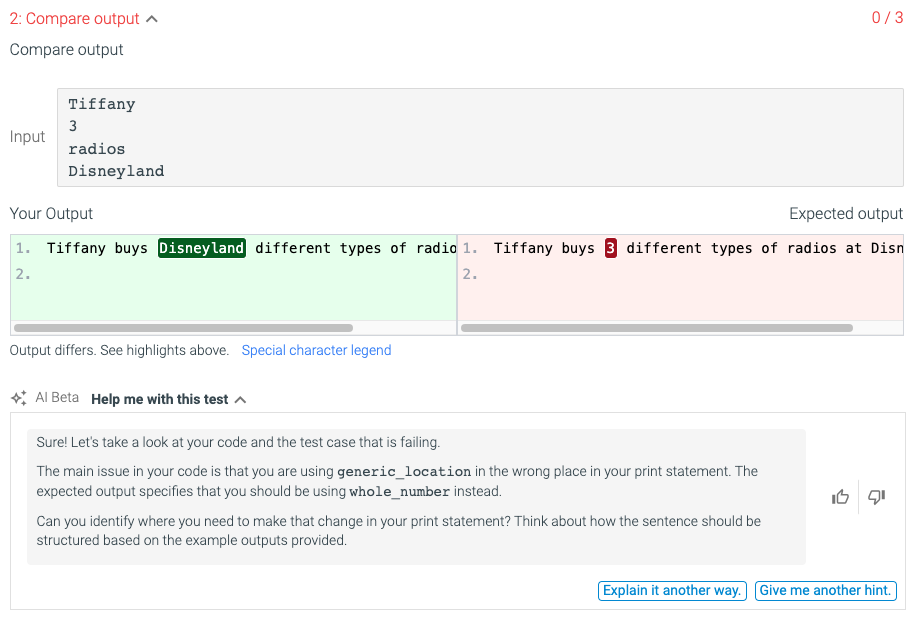

When we picture an AI tutor, many of us envision a ChatGPT-style chatbot interface through which the student can ask any question and the AI will generate a response. That’s not what is happening with this zyBooks AI tutor, however.

“One of the keys to the design is that it’s not an open-text field,” says Lyssa Vanderbeek, Wiley group vice president for courseware. If a student is struggling with a problem, the tutor will appear and provide six different input options, offering, for instance, to give the student a hint or provide an alternative explanation.

This gives the AI tutor a more controlled interface and can keep it focused on helping students solve a problem rather than giving away the answer, Meuth adds. “No matter what sort of protections that we put in as a driving prompt, students, if they have the ability to converse with the thing, will trick it into giving you the answer,” Meuth says.

Teaching An AI Tutor

During an initial pilot study of 150 students this fall, the AI tutor wasn’t particularly effective at first. Its hints were not the most helpful ones for students, so it had to be programmed to give better hints, according to Vanderbeek.

“We’ve really identified the highest value hints,” Vanderbeek says. “Because if a student is stuck, there may be ten things you could suggest, but really, what are the top one or two things that will get the student unstuck?”

She adds, “Through the feedback loop we’ve had with Ryan and his team, we’ve been able to refine the tutor so that it can better identify the most valuable thing to say to the student.”

On his end, Meuth also noticed that the tutor tended to bounce between two extremes: either giving away the answer or providing a hint that was too vague to be helpful. He says the Wiley team figured out how to tune it to the sweet spot in between, where a hint is actionable but is not the answer.

After these improvements, a second cohort of 350 students began using the AI tutor this fall, and so far they’re using it ten times as frequently as the first group did. “Students are coming back to it more often, and the responses are highly targeted to help students get over the barrier,” Meuth says.

Next Phase of Research

Despite being pleased with how the AI tutor is working, Meuth and the Wiley team are still studying how to best use it.

“We just got approval to start this next experiment, and it’s going to be a control group study,” Meuth says. One group will have access to the tutor and another will not. Meuth and colleagues will compare academic achievement between the two groups and will also evaluate students’ self-efficacy and attitudes toward programming. They want to know whether students who use the tutor are more or less confident.

Though Meuth loves the way this AI tutor works, he is dedicated to using it with students in a thoughtful way backed by research. “We’re concerned about things like students becoming over-reliant on help or things like that,” he says. “So we want to make sure that the value that students are getting out of utilizing the tutor is contributing in a healthy way.”